Digital Image: Reset

Instructor: Eric Rosenthal

Week 13:

Laser spectrometry. Raman spectrometry can determine the chemical composition of a target. It can be used on small cell samples to determine whether a cell is healthy or cancerous (the measurement kills the single cell), and it can determine the chemical composition of any surface. The reflected wavelengths are complex; for a wall they can include titanium dioxide, fungus, etc. Database analysis can narrow it down to the constituent parts. The notch filter is the most expensive part. An X-ray spectrometer can be used to determine metal content.

Week 12:

New Theories of Human Vision

Interlace versus progressive scan. Interlace was developed to save bandwidth on analog, vacuum tube technology. NTSC was the standard. Progressive scan did not start to be considered for television until the 1990s, still in an analog framework. Nick Negroponte wanted to bring in Hitachi to the Television Standards Group (despite the charter restricting membership to American companies). Rosenthal and his associates left the group to go to the MIT electronics lab where digital imaging was being developed. The researchers showed the images to the FCC and it was decided to switch to digital. Sony and NHK had already spent big money on HD analog. In part the decision was made to free up bandwidth spectrum for fire, police, cellular phones etc. The FCC made no decision on a single standard. 18 different television standards, 18 different decoding types, and complex and expensive decoders delayed adoption of digital television. Sony fought to keep interlace standards. CBS uses interlace digital standards. Rosenthal became head of R and D for Disney and ABC and decided to go with progressive scan throughout. A mix of standards remains. Quality is still pretty lousy. Missed opportunity to go to a higher standard.

Human vision mismatch for digital image standards. Defense department funds research into human vision system. Throw out old tech and redesign from the ground up. All vision scientists know that the current theory of human vision is wrong. The actual mechanism at work is still poorly understood. In the UK vision tests in schools are tied to ancestry information. Ms. Herbert is investigating tetrachromacy at the University of Newcastle on Tyne. Nearly all tetrachromats are daughters of colorblind men. No difference in cone chemistry. Only difference between father and daughter eyes is cone size.

Human vision system does not operate as a photoreceptor, otherwise we would see noise and chromatic aberration. The human eye is a wave detector. Macular degeneration studies show that cones do not degrade, rather the muscle that moves the cone goes bad. In the morning your eyes are aligning themselves. The muscle gets calcified, cannot tune itself for maximum tuning.

1/7 of a second intervals for brain processing of images, yet color processing is done in nanoseconds. If cones are wavelength detectors color detection can be done quickly in the cones instead of the brain. Rhodopsin reacts to light in nanoseconds.

Rosenthal returns to the Office of Naval Research and gets the go ahead to calculate Maxwell’s equations for eye cone microtubules, and build micro antennas for lightwave detection. Dr. Dennis Prather at the University of Delaware had the equipment to do the research. Maxwell’s equations were run on the cones on supercomputers and the results were identical to the visual acuity of the eye. Cones have a double spiral structure to cancel out noise for wave detection.

Gallium nitride was developed by Raytheon for solid state transmitters for radar. Gallium nitride can be used for linear detection of light waves.

97 gigahertz water window, sees through concrete walls developed for military use.

1 million dollars and 6 weeks later, one pixel 1024 primary light generator. Heterodyning using gigahertz cell phone tech (readily available). Receive and display images that are beyond our visual range. Technology would not require a lens, just a sensor. Pan, focus, and zoom in software. The wave phase detection allows “listening” to the image.

2-5 nanometer color resolution for human vision. 250 increments in color resolution. 10 to the 10th power dynamic range. Complex visual processing supplemented by other senses. Layered perception (layered data perception): edge detection and color detection happen before object recognition. Retina is refreshed every morning, about a 10th of it is ejected. Long term sleep deprivation degrades the visual system. Camera sensors require lighting to fix.

Aqueous humor acts as a light balance reference. Retina is protected from UV and Infrared so that they are not damaged.

Human vision system is continuous, no frame rate. Retinal cells detect randomly, not progressive scan. No noise or moire. Many artificial lights flicker. The eye can detect micro luminance changes that show textures like fabric fuzz. Hyperspatial acuity from phase detection.

Human Vision System: color, detail, smell, audio, motion, spatial, form, object recognition

MPEG: motion, detail, color

Human beings are difference engines, they detect differences. Eyes are always in motion (saccades) 1000 times a second, analysing differences. Even with one eye we get micro 3D depth perception.

Only a thousand neurons go to the brain, delivering the equivalent of 3 terabytes per second. We do not know how the information is structured when it is sent to the brain. It may be the reason why we are subject to certain optical illusions. Pulses are encoding a large amount of information. Cones have 1500 microtubules for wave detection.

C-tech patents:

1. Nano-antennas each pixel acts as a spectrometer. Radar for lightwaves.

2. Full spectrum generator

3. In software lensing, zoom, etc.

Everything about current imaging tech destroys information. C-tech gets lossless output from original data, allows for sophisticated analysis that would be otherwise impossible. Useful for medical, research, surveillance, etc.

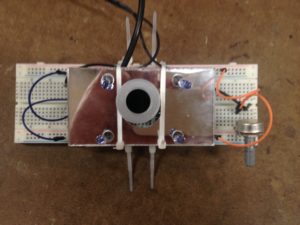

Final Project: Infrared Photography Using Low Resolution Web Cam

Concept: By removing the OEM infrared filter and adding an infrared pass filter, the webcam is converted to an infrared camera. With a simple array of infrared LEDs, pictures can be taken in complete darkness. Using panorama shooting and stitching techniques, it is possible to produce a higher resolution image than the camera is normally capable of.

Process: First the infrared filter is removed from the lens assembly of the webcam using an X-Acto blade. An infrared pass filter is mounted over the lens assembly using a 3D printed part. The infrared LED circuit is assembled on a breadboard with a potentiometer for luminance control; tissue paper acts as a diffuser.

This assembly was tested but caused the webcam to fail.

A reference shot in normal light:

In infrared with no other illumination except for LED array:

Camera setup:

Tripod, clamp, toy iguana, black felt background. The subject was moved incrementally across the table beneath the camera with only the LED array to illuminate. 15 shots are captured and stitched together manually.

Resulting image:

Working with such a low resolution camera was pretty frustrating. It was difficult to focus, tricky to pair with my laptop, and noisy. To get better results would require a giga cam type stepper motor setup. Hacking the firmware might also be an option.

Week 11:

Hard drives eventually wear out because they have moving parts. SSDs are only guaranteed for a set number of write cycles.

Printer technology. Ink jet. Bubble jet. Dot matrix.

Heating up the ink so that it bubbles out of tiny holes. Piezo with tiny holes.

Double printing to get better saturation.

Laser printers. The drum is photosensitive, maintains a static charge, cannot ever be touched. It maintains a charge until it is exposed to bright light. The discharge lamp erases it, a corona wire with high voltage or radioactivity. The drum is uniformly charged. The laser discharges all areas to remain white. Toner hopper, fine plastic particles that are attracted to the charged parts of the roller. The paper is positively charged and then attached to the drum, the paper is passed through hot rollers that fuse the particles to the paper at around 300 degrees (near the burning point of paper). The paper is passed through a path that cools the paper down before spitting it out.

Data centers are being constructed adjacent to power plants due to their energy consumption. Most of the energy is used to cool the data center because a magnetic field is destroyed at high temperature.

Write once read forever. WORF. Archival data storage is a huge challenge.

Accountants took over in the 90s. Electrical systems get put in basements, because it’s cheaper; then floods fuck it up.

Everything is run through the metric of cost. No other considerations are taken.

Everything is run based on how Wall Street will feel about your business next quarter. Business leaders have no core competencies. Businesses used to be run by people with core competencies. Disney used to have five year plans.

Hitachi does a hundred year plan. R and D tax credits were phased out in the US, companies lost ground. Congress responded with a botched solution where the R and D tax credit arrives in March, not enough time to use it, no guarantee that it will renew.

Dumbo naval yard startups, not run by accountants.

Week 10:

Photos using a flash, with and without diffuser, with first and second curtain settings.

A femtosecond laser can produce aggregate waveforms that cover the entire electromagnetic spectrum. Only visible light waves get through water. A cold mirror reflects visible light and transmits infrared. DLP from Texas Instruments is an array of millions of microscopic mirrors, moved with tiny charges of electricity. They are kept in a vacuum and can last nearly forever. Break the vacuum seal and you will see a puff of glittery dust that is thousands of microscopic mirrors breaking.

Flash photography. Use a diffuser, get your flash timing correct. Use slow synchronization for flash photos.

Time lapse requires a lot of planning. Especially to keep the lighting correct.

Using sensors to trigger a photo flash with the shutter open in a dark room to capture fast events.

Week 9:

3D movies captured with straight on cameras are a mismatch to human vision. We end up seeing layered depth. It stresses the visual system. A sliding camera plate can be used to create 3D images with a single camera. You can fake it by shifting your weight from one leg to the other, and you can also do fixes in post.

Layered focus, software combines several pictures (some of the image in focus, some not) so that everything is in focus. Kind of like HDRI but for focus.

Layered Focus image

Same subject with no layering

Hyperfocal distance, the lens settings where the maximum amount of the image is in focus. This is the setting that can capture fast moments where the time it would take to autofocus is too slow. Unfortunately it is different for every combination of camera and lens type. Online calculators can help.
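What the online calculators do can be sketched in a few lines. This is a minimal example using the standard thin-lens approximation H = f²/(N·c) + f. The 0.03 mm circle of confusion is an assumption (a common full-frame value), not something from the lecture, and the function name is made up for illustration.

```python
def hyperfocal_distance_mm(focal_length_mm: float, f_number: float,
                           circle_of_confusion_mm: float = 0.03) -> float:
    """Hyperfocal distance H = f^2 / (N * c) + f, all lengths in millimetres.

    circle_of_confusion_mm defaults to 0.03 mm, an assumed full-frame value;
    it changes with sensor size, which is why every camera/lens pair differs.
    """
    if focal_length_mm <= 0 or f_number <= 0 or circle_of_confusion_mm <= 0:
        raise ValueError("all inputs must be positive")
    return (focal_length_mm ** 2) / (f_number * circle_of_confusion_mm) + focal_length_mm


if __name__ == "__main__":
    # Focus at H and everything from roughly H/2 to infinity is acceptably sharp.
    for n in (2.0, 5.6, 16.0):
        h = hyperfocal_distance_mm(50.0, n)
        print(f"50 mm at f/{n:g}: focus at {h / 1000:.2f} m, "
              f"sharp from about {h / 2000:.2f} m to infinity")
```

Stopping down (larger f-number) pulls the hyperfocal distance closer, which is why small apertures are the usual choice for zone focusing.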

Timelapse: Takes planning. CHDK and Magic Lantern can allow you to hack your camera to do more than the manufacturer programmed the camera to do by default.

Week 8:

Interocular distance is the width between the eyes, different for different people. Depth is generated from depth disparity.

Depth disparity is processed in our brain to generate spatial relationships.

Our eyes point inwards at an angle; where the vectors from each pupil intersect is where we have the greatest resolution and focus. Planar point: the distance where the eyes converge to one 2D image. Everything in front of and behind the planar point has depth information.

Orthostereoscopic: eyes cant in at an angle.

Parallel axis, used for 3D movies, has two cameras pointing dead ahead. It only creates “layered 3D”.

Stereoscopic 3D scenes stress out the perception system.

Depth info beyond 18 feet is flat.
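One way to see why depth flattens out with distance is to compute how far the eyes have to toe in to converge on a point. This is a rough geometric sketch, not the lecture's model: it assumes a 63 mm interocular distance (a typical adult figure, my assumption) and treats the eyes as two points converging symmetrically on the target.

```python
import math


def vergence_angle_deg(distance_m: float, interocular_m: float = 0.063) -> float:
    """Angle between the two lines of sight converging on a point straight ahead.

    interocular_m defaults to 0.063 m, an assumed typical adult value.
    """
    if distance_m <= 0 or interocular_m <= 0:
        raise ValueError("distances must be positive")
    return math.degrees(2.0 * math.atan((interocular_m / 2.0) / distance_m))


if __name__ == "__main__":
    feet_to_m = 0.3048
    for feet in (1, 3, 6, 18, 60):
        angle = vergence_angle_deg(feet * feet_to_m)
        print(f"{feet:>3} ft: vergence angle {angle:.3f} degrees")
```

The angle shrinks quickly as the target moves away, so the difference between the two eyes' views carries less and less depth information at long range.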

Focus layering for macro that has a focus area that is too narrow.

Week 7:

It is possible to use a spotting scope or other inexpensive telescope to get a photograph equivalent to an extreme telephoto lens. There may be extreme vignetting.

HDRI High Dynamic Range Imaging, three exposures are used to create an image that has detail in shadows, well lit areas, and medium lighting levels. The subject must be stationary, or at least not fast moving. Some HDRI is done in camera. Photomatix, FDR Tools, Hugin, and HDR Shop are examples of HDRI software. Paul Debevec pioneered HDR imaging as well as several other breakthroughs in imaging and 3D animation.
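The basic idea behind merging bracketed exposures can be sketched as a weighted average. This is a simplified illustration, not how Photomatix or any of the listed tools actually work: it assumes a linear sensor response (real pipelines first recover the camera response curve), 8-bit pixel values, and a simple "hat" weight that trusts mid-tones most. All function names are hypothetical.

```python
def hat_weight(value: int) -> float:
    """Weight that peaks at mid-gray and falls to zero at 0 and 255."""
    return 1.0 - abs(value - 127.5) / 127.5


def merge_exposures(exposures, exposure_times):
    """Merge bracketed exposures into relative radiance values.

    exposures: one list of 8-bit pixel values per shot, all the same length.
    exposure_times: shutter time in seconds for each shot.
    Assumes a linear sensor response (an assumption made for this sketch).
    """
    merged = []
    for values in zip(*exposures):
        weighted_sum = 0.0
        weight_total = 0.0
        for value, seconds in zip(values, exposure_times):
            weight = hat_weight(value)
            weighted_sum += weight * (value / seconds)
            weight_total += weight
        if weight_total > 0:
            merged.append(weighted_sum / weight_total)
        else:
            # Every shot is clipped at this pixel; fall back to a plain average.
            merged.append(sum(v / t for v, t in zip(values, exposure_times)) / len(values))
    return merged


if __name__ == "__main__":
    under = [64, 2, 130]
    normal = [128, 5, 250]
    over = [255, 10, 255]
    print(merge_exposures([under, normal, over], [0.25, 0.5, 1.0]))
```

Clipped values get zero weight, so the blown-out highlight in the long exposure does not contaminate the estimate taken from the shorter ones.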

Panorama photography, stitch together multiple images into a larger image. You must lock exposure and light balance to get the sequence to match. Use a tripod and make sure your bubble level is spot on. Gigapan type photos can create incredibly large and detailed images through similar stitching. Panoramas can be done horizontally, vertically, or both.

Problem: lens distortion and chromatic aberration

Software: DxO Optics (expensive) or PTLens (free). When cameras are developed, tests are made on the lens by the manufacturer and an information table is created. All new cameras write Exif data, information about the photo, into the image file.

Exif example from wikipedia:

| Tag | Value |
|---|---|
| Manufacturer | CASIO |
| Model | QV-4000 |
| Orientation (rotation) | top-left (8 possible values) |
| Software | Ver1.01 |
| Date and time | 2003:08:11 16:45:32 |
| YCbCr positioning | centered |
| Compression | JPEG compression |
| X resolution | 72.00 |
| Y resolution | 72.00 |
| Resolution unit | Inch |
| Exposure time | 1/659 s |
| F-number | f/4.0 |
| Exposure program | Normal program |
| Exif version | Exif version 2.1 |
| Date and time (original) | 2003:08:11 16:45:32 |
| Date and time (digitized) | 2003:08:11 16:45:32 |
| Components configuration | Y Cb Cr – |
| Compressed bits per pixel | 4.01 |
| Exposure bias | 0.0 |
| Max. aperture value | 2.00 |
| Metering mode | Pattern |
| Flash | Flash did not fire |
| Focal length | 20.1 mm |
| MakerNote | 432 bytes unknown data |
| FlashPix version | FlashPix version 1.0 |
| Color space | sRGB |
| Pixel X dimension | 2240 |
| Pixel Y dimension | 1680 |
| File source | DSC |
| Interoperability index | R98 |
| Interoperability version | (null) |
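As a small example of putting these fields to use, the exposure time and F-number in the table are enough to work out the exposure value, EV = log2(N²/t). The sketch below parses the two strings exactly as they appear in the table. The parsing helpers are hypothetical and only handle this display format, not the raw rational values stored inside a real Exif block.

```python
import math
from fractions import Fraction


def parse_exposure_time(text: str) -> float:
    """Turn a display string such as '1/659 s' into seconds."""
    return float(Fraction(text.replace("s", "").strip()))


def parse_f_number(text: str) -> float:
    """Turn a display string such as 'f/4.0' into the number 4.0."""
    return float(text.strip().lower().replace("f/", ""))


def exposure_value(f_number: float, exposure_time_s: float) -> float:
    """EV = log2(N^2 / t); each step of 1 EV halves or doubles the light."""
    return math.log2(f_number ** 2 / exposure_time_s)


if __name__ == "__main__":
    seconds = parse_exposure_time("1/659 s")
    aperture = parse_f_number("f/4.0")
    print(f"EV = {exposure_value(aperture, seconds):.2f}")
```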

Problem: Noise

Best to avoid capturing it in the first place. Software: Neat Image, Noise Ware. All sensors have imperfections due to gamma rays; sensors are scanned and the camera is told to ignore the hot or dead pixels. On-camera software compensates by generating information from the eight surrounding pixels. Graphic Converter for hot and dead pixels.
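The eight-neighbor compensation can be sketched as follows. This is a simplified illustration on a grayscale grid. Real cameras do this on the raw Bayer data with same-color neighbors and proprietary logic, so treat the function below as a hypothetical stand-in.

```python
def repair_pixels(image, bad_pixels):
    """Replace each listed bad pixel with the mean of its good neighbors.

    image: list of rows of integer pixel values (grayscale).
    bad_pixels: iterable of (row, col) positions flagged as hot or dead.
    Pixels on an edge or corner simply have fewer than eight neighbors.
    """
    height, width = len(image), len(image[0])
    bad = set(bad_pixels)
    repaired = [row[:] for row in image]  # leave the input untouched
    for row, col in bad:
        neighbors = [
            image[r][c]
            for r in range(max(0, row - 1), min(height, row + 2))
            for c in range(max(0, col - 1), min(width, col + 2))
            if (r, c) != (row, col) and (r, c) not in bad
        ]
        if neighbors:
            repaired[row][col] = round(sum(neighbors) / len(neighbors))
    return repaired


if __name__ == "__main__":
    frame = [
        [10, 12, 11],
        [11, 255, 10],  # the center is a hot pixel
        [12, 10, 12],
    ]
    for line in repair_pixels(frame, [(1, 1)]):
        print(line)
```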

Problem: Skin Tones: Icorrect Portrait.

Light Field Photography, from a single light field image you can create a 3D image set.

Week 6:

IR long pass filter makes heat signatures visible.

The glass over the sensor in a digital camera reflects 20% of the light that reaches it, so digital camera lenses have an anti-reflection coating to prevent double image artifacts.

Black body radiation: color temperature is measured in kelvins. Daylight is at about 6,500 K (more or less).
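For a feel of what a color temperature means, Wien's displacement law gives the wavelength at which an ideal black body radiates most strongly: λ_max = b / T. The sketch below uses the standard constant b ≈ 2.898 × 10⁻³ m·K. The example temperatures are my own picks (roughly candle, tungsten, and daylight), not figures from the lecture.

```python
WIEN_CONSTANT_M_K = 2.897771955e-3  # Wien's displacement constant, metre-kelvin


def peak_wavelength_nm(temperature_k: float) -> float:
    """Peak emission wavelength of an ideal black body, in nanometres."""
    if temperature_k <= 0:
        raise ValueError("temperature must be above absolute zero")
    return WIEN_CONSTANT_M_K / temperature_k * 1e9


if __name__ == "__main__":
    # Example temperatures are assumptions chosen for illustration.
    for label, kelvin in (("candle", 1900), ("tungsten", 3200), ("daylight", 6500)):
        print(f"{label:>8} {kelvin} K: peak near {peak_wavelength_nm(kelvin):.0f} nm")
```

Hotter sources peak at shorter (bluer) wavelengths, which is why a higher color temperature looks cooler to the eye.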

Halogen lights are hot and dangerous but still produce high quality light. LEDs are safer, better color temperature, but emit less light.

Lighting setups:

Portrait – Light for the background, hair light (up high), key light (lights up the face), and an eye light (adds light to the eyes), camera is just above the eye light, high enough so that we are not seeing underneath the chin (unflattering).

Week 5:

Assignment: Monochrome photograph

This photo was shot on a Canon 6D camera with a Zenit 85mm Jupiter manual lens. The camera was mounted on a tripod and an F&V LED ring lamp was used to light the subject. The camera was set to manual white balance and set with the grey card. The monochrome setting was used but the raw image file still had color, so I desaturated it in the Photoshop Camera Raw software. Compared to the color image, the black and white looks sharper and more like a vector graphic, with crisper delineation in the grain of the wood and other details.

Assignment: Depth of Field

Same setup and procedure as the monochrome. F2 – F16 aperture difference.

Week 4:

Color separations in film photography. Sergey Mikhaylovich Prokudin-Gorsky (1863 – 1944), a Russian chemist and photographer, developed the first RGB color separation process. His work went unrecognized, due largely to the limitations of projection systems of the time, until it was sold to the Library of Congress and rediscovered by a student who recognized the plates as 3 color separations. With modern technology these 100 year old plates can achieve a color gamut better than current cameras!

Workflow: Switch camera to black and white mode. Take an exposure for each filter R, G, B, and open them in a photo editing program, change mode to grayscale, combine them in channel mixer.

red # 25 filter

green # 58 filter

blue # 47 filter
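The channel mixer step amounts to stacking the three grayscale exposures as the red, green, and blue channels of one image. A minimal sketch with plain nested lists is below. It assumes the three shots are already aligned and the same size, which in practice takes a tripod and a static subject. The function name is hypothetical.

```python
def combine_separations(red, green, blue):
    """Stack three aligned grayscale images into one RGB image.

    Each input is a list of rows of 0-255 values, shot through the
    red (#25), green (#58), and blue (#47) filters respectively.
    Returns rows of (r, g, b) tuples.
    """
    if not (len(red) == len(green) == len(blue)):
        raise ValueError("separations must have the same number of rows")
    combined = []
    for red_row, green_row, blue_row in zip(red, green, blue):
        if not (len(red_row) == len(green_row) == len(blue_row)):
            raise ValueError("separations must have the same row length")
        combined.append(list(zip(red_row, green_row, blue_row)))
    return combined


if __name__ == "__main__":
    red_shot = [[200, 10], [10, 128]]
    green_shot = [[10, 200], [10, 128]]
    blue_shot = [[10, 10], [200, 128]]
    for row in combine_separations(red_shot, green_shot, blue_shot):
        print(row)
```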

Week 3:

Assignment: Bracketed Exposures

The setup:

The results:

Fun with my macro bellows and tethered shooting:

It took some fiddling with the controls but I eventually figured out how to make the Canon 6D automatically perform bracketed exposures. I also found out how to disable the mechanical mirror movement. I set up the subject on a seamless paper backdrop with three point LED lighting, and the camera on a tripod. I used a Zenit Jupiter 85mm lens. I also experimented with my macro bellows to get some close up shots. For the close up shots I tethered the camera and triggered the exposure using the EOS Utility. The bracketed exposure feature is very useful for 3D animation. HDRI images can be used to create lighting for virtual objects and scenes in a way that makes them look as if they exist in the real world.

Always use the highest file size and quality. Problems with using flash for photography: it’s a point light that creates hard shadows, and it is not full spectrum (LEDs have better quality light).

Abrupt change in lighting is hard for digital cameras to reproduce.

Weight your tripod to dampen vibration as much as possible. If you can’t use a tripod, brace the camera against a solid object (wall, chair, table, etc.).

Always slightly underexpose your image. Blown-out regions of your image have no information. An underexposed image can be fixed in post. Leave space on the white side of the histogram.

F 5.6 is the setpoint for lens manufacturers to capture the most detail.

Always take spare batteries.

An optical low pass filter (frosted glass) is in front of the sensor to prevent moire patterns from showing up.

Exposure bracketing, flash exposure, and white balance bracketing are features available with some digital cameras.

Polarizing filters block light that is polarized in a particular orientation, which is typical of reflected light arriving at an angle (not directly into the camera lens); circular is better. Cuts down on reflected light, especially good for photographing water. Makes skies bluer.

Week 2:

All camera sensors are black and white sensors. We use tricks to make the sensor act as a color sensor. The Bayer array is one such trick, developed in the 1970s. The Bayer array is an array of filters that separates light into 1/2 green, 1/4 red, and 1/4 blue. The filters in the camera decrease light sensitivity. The filters are created by a series of masking and vapor deposition steps. Simplified, unidirectional photons were invented to make the math easier (trigonometry versus Maxwell’s equations) for the creation of complex lens systems. Einstein won his Nobel Prize for the photoelectric effect, not for the theory of relativity. The absorption of a photon by certain materials causes the generation of an electron. Sensors are not very efficient at converting photons to electrons. The charges a sensor collects are stored in a micro capacitor. The nano-voltage is amplified to get a high enough signal level to process.
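The 1/2 green, 1/4 red, 1/4 blue split falls out of the repeating 2×2 tile. The sketch below assumes the common RGGB ordering (red top-left); actual sensors may start the tile on a different color, so the layout here is an assumption.

```python
from collections import Counter


def bayer_color(row: int, col: int) -> str:
    """Filter color over the photosite at (row, col), assuming an RGGB tile."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def filter_fractions(height: int, width: int) -> dict:
    """Fraction of photosites under each filter color."""
    counts = Counter(bayer_color(r, c) for r in range(height) for c in range(width))
    total = height * width
    return {color: counts[color] / total for color in ("R", "G", "B")}


if __name__ == "__main__":
    for r in range(4):
        print(" ".join(bayer_color(r, c) for c in range(8)))
    print(filter_fractions(4, 8))
```

Each photosite records only one color; the other two values at that position are interpolated from neighbors (demosaicing), which is part of what the in-camera processing does.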

CCD: Charge-Coupled Device. Each photocell in the sensor has a capacitor. A nano-volt signal is amplified a million times. After amplification an analog to digital converter is used; 8 bit encoding takes the analog signal and assigns a value of 0-255. The process is done row by row in nanoseconds. Lots of parallel processing is done to increase the processing speed.
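The analog to digital step can be sketched as simple quantization. This is an idealized model: the 1.0 V reference is an arbitrary assumption, and a real converter adds noise and nonlinearity that are ignored here.

```python
def quantize(voltage: float, v_ref: float = 1.0, bits: int = 8) -> int:
    """Map an amplified voltage in [0, v_ref] to an integer code.

    With bits=8 the codes run from 0 to 255. v_ref is an assumed
    full-scale reference voltage, chosen only for illustration.
    """
    if v_ref <= 0 or bits <= 0:
        raise ValueError("v_ref and bits must be positive")
    levels = 2 ** bits
    clamped = min(max(voltage, 0.0), v_ref)  # out-of-range signals clip
    return min(int(clamped / v_ref * levels), levels - 1)


if __name__ == "__main__":
    for volts in (0.0, 0.1, 0.5, 0.999, 1.0, 1.3):
        print(f"{volts:5.3f} V -> 8-bit {quantize(volts):3d}, 12-bit {quantize(volts, bits=12):4d}")
```

Anything at or above the reference voltage lands on the top code, which is the blown-out highlight problem: the information above that level is simply gone.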

CMOS: Complementary metal–oxide–semiconductor. Less efficient but cheaper. Algorithms and tricks are used to make it look as good as CCD.

Supercomputing chips in digital cameras process the raw data on the fly.

All RAW processing is proprietary. RAW is unprocessed data from the camera. A TIFF file is a RAW file with instructions, AKA profile information (white balance, color balance, lens, etc.)

To calculate white balance the camera mixes all pixels to determine gray. Gets it wrong most of the time. Use of a reference card creates a better and more accurate white balance than the camera can do on its own.
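The reference card approach can be sketched as follows: sample pixels from the card, then scale red and blue so the card comes out neutral. This is a simplified illustration that works on 8-bit RGB tuples and normalizes to the green channel. Real cameras apply the gains to raw sensor data, and the function names are hypothetical.

```python
def gains_from_gray_card(patch):
    """Per-channel gains that make the sampled gray card neutral.

    patch: list of (r, g, b) pixels sampled from the card.
    Gains are normalized so green stays at 1.0.
    """
    count = len(patch)
    if count == 0:
        raise ValueError("patch must contain at least one pixel")
    mean_r = sum(p[0] for p in patch) / count
    mean_g = sum(p[1] for p in patch) / count
    mean_b = sum(p[2] for p in patch) / count
    if mean_r == 0 or mean_b == 0:
        raise ValueError("card patch has an empty channel")
    return (mean_g / mean_r, 1.0, mean_g / mean_b)


def apply_gains(pixel, gains):
    """Scale one (r, g, b) pixel by the gains, clipping at 255."""
    return tuple(min(255, round(value * gain)) for value, gain in zip(pixel, gains))


if __name__ == "__main__":
    card = [(180, 150, 110), (182, 151, 112), (178, 149, 108)]  # warm cast
    gains = gains_from_gray_card(card)
    print("gains:", tuple(round(g, 3) for g in gains))
    print("card after correction:", apply_gains(card[0], gains))
```

The "mix all pixels" method in the notes is the same calculation with the whole frame used as the patch, which goes wrong whenever the scene is not gray on average.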

JPEG compression destroys information and creates terrible artifacts.

When taking a photo you want your tonal range to match the available information. Digital cameras have a dynamic sensor (no need for a light meter). In order to minimize the noise from the amplifier use the lowest ISO setting and do not hand hold. Moving the camera half a pixel cuts the accurate, usable information in half. The histogram shows whether or not you have a good exposure that is capturing as much of the available information as possible.
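What the histogram is reporting can be sketched in a few lines: count how many pixels fall at each level, then check how many are piled up at the extremes. This is a minimal example on 8-bit luminance values. Treating only the exact values 0 and 255 as clipped is my simplification.

```python
def histogram(values, levels: int = 256):
    """Count of pixels at each level from 0 to levels - 1."""
    counts = [0] * levels
    for value in values:
        counts[value] += 1
    return counts


def clipped_fractions(values, levels: int = 256):
    """Fraction of pixels at pure black and at pure white."""
    counts = histogram(values, levels)
    total = len(values)
    if total == 0:
        raise ValueError("no pixels to analyze")
    return counts[0] / total, counts[levels - 1] / total


if __name__ == "__main__":
    luminance = [0, 3, 40, 41, 90, 128, 128, 200, 255, 255]
    shadows, highlights = clipped_fractions(luminance)
    print(f"crushed shadows: {shadows:.0%}, blown highlights: {highlights:.0%}")
```

A spike at the white end means those pixels carry no recoverable detail, so the exposure should come down until the spike disappears.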

Preamble:

The subject is a censer from Columbia. The backdrop is a drab white table and white wall. The camera is a Canon 6D. The lens is a Russian made Zenit Helios 44 millimeter manual set at full aperture f2. Fun fact: Zenit cameras and lenses were manufactured based on specifications from the Carl Zeiss corporation after the Red Army plundered one of their factories during WWII. The camera was mounted on a cheap mini tripod on a second table, and the subject was evenly lit by an LED ring lamp mounted around the body of the lens. ISO was set to its lowest level (100) and the shutter speed was adjusted down to compensate (1/80). The lens has a very shallow depth of field so only some of the surface details are in sharp focus.

1. Auto White Balance

Reviewing the automatically white balanced photo side by side with the manually white balanced photo, it is clear that the color is not quite as rich or saturated. Overall it appears as though it was taken into Photoshop and ever so slightly desaturated. However, the Canon auto white balance does have the advantage of speed. For spontaneous photos it would be difficult to apply manual white balance with the gray card.

2. Manual white balance

Compared to the auto white balanced shot, the manual white balanced photo has a deeper, yellower golden color. Overall there is more color information, even on the wall behind the subject. Setting up manual color with the Canon 6D is somewhat counterintuitive. I think it could have a better workflow. I plan to try Magic Lantern, a software that adds additional functionality and manual control to the camera next time. Perhaps it will offer a more streamlined manual white balance setting procedure.

3. Manual white balance and histogram

To be honest I am not certain that my adjustments were ideal for this shot. The histogram display on the camera screen is pretty small, and monochromatic. By comparison I’ve worked with the 4K Blackmagic camera before and it has a lovely large, full-color histogram display. At any rate the color is as rich as in shot number two.

Post mortem:

A better, heavier tripod is a must for the next shooting assignment. I noticed that depressing the shutter release button definitely produces vibration. Next time I want to set up a timed release, or tether the camera to a laptop or smartphone to trigger an exposure.

Week 1:

“Changing the rules for capturing and printing digital imagery.”

How do you create the best possible images for the way human vision actually works?

Every picture submitted must come with a paragraph of text that explains the technical approach, intentions, etc.

Change blindness: Something is missing, it was there the whole time but was moved slightly.

The human vision system is superior to any device that we have built to date. Full spectrum of colors. 10 billion to 1 dynamic range. Continuous image capture.

Modes of human vision based on available light:

Scotopic vision: starlight, night vision, rods only, rods are only sensitive to blue, monochromatic, requires acclimation of several minutes.

Mesopic: moonlight, rods and cones, light balancing possible. Cones are filling in mostly red and green to add color, not the best for color discrimination.

Photopic: Indoor lighting, indirect sunlight, best acuity and color discrimination. Digital cameras only capture a small band, most activity on tv and movie shoots is the balancing of lighting.

The daylight spectrum has gaps called Fraunhofer lines.

RGB is a form of lossy data compression. It was based on invalid assumptions on the function of human vision. The technology that automatically does white balance and exposure is not correct. The technology that resolves white balance and exposure in software is not correct. Reduce post processing down to cropping.

“Tune image capture to vision system attributes”

As humans age, the vitreous humor changes from a gel to a liquid. This changes the index of refraction, causing part of the intraocular phenomenon known as floaters.

The fovea, only 9 degrees, is where we have a high concentration of cones; this is where our fine detail vision happens. Cone cells have a range of sizes which determine which wavelength of light they can detect. Tetrachromats have a wide range. The color blind have uniform cone size.

Cones are wavelength detectors (different colors) and rods are photon detectors (monochrome).

The human lens is a simple lens that does not compensate for chromatic aberration. The lens directs the waves towards the cones so that the waves line up to be detected. The cone bounces the light wave towards microtubules that vibrate at specific frequencies, they act as the detectors that send the signal to the brain.

Saccade: motion of the eye on low, medium, and high frequencies. Eye works on difference information. The neural network in the retina pre-processes the image. With saccade motion the six million cones have their resolution multiplied as information is taken from slightly different angles constantly. Human vision is not interlace or progressive scan, it is random sampling.

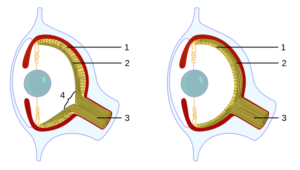

Fun fact: Vertebrates and octopuses evolved complex eyes separately. Octopuses have the nerve fibers behind the retina, vertebrates have the nerve fibers in front.

By Caerbannog – Own Work, based on Image:Evolution_eye.png created by Jerry Crimson Mann 07:07, 2 August 2005 UTC (itself under GFDL)., CC BY-SA 3.0, https://commons.wikimedia.org/w/index.php?curid=4676307